What’s the story with the wiggly green line? Are those the 6 green pixels on the large German flag?

zkfcfbzr


I managed to overwrite half of the long German flag near the bottom, plus the original/lower South African flag, the Czech flag, and the Polish flag. I also did like a third of the background to your message and changed the “in” to “on”. I didn’t join the Matrix/Discord but your message is what motivated me to do all that, so maybe you’re better at recruiting than you think 😉

While there were flags everywhere, I felt like there were a lot fewer than these things usually have, at least.

This is one of the better ones I’ve seen. It actually looks pretty 3D.

Make the image as large as you can on your screen while still having it all visible. I don’t recommend a phone - try it on a monitor. Don’t try to see the whole image at once - look at one small part. Play with the focus of your eyes until you start to see an edge forming - and when you do, lock onto the edge until you can see it without struggling. Just try to make the edge clearer without bothering with the larger shape yet. Once you do, it should be easier to hold the focus as you look around the image and actually pick out what shapes everything is making.

I recommend focusing on this part of the image as you start - there’s a pretty cool sea turtle there. Don’t use this version with the red scribble, since the scribble makes it noticeably more difficult.

26·1 month ago

Do people really use the term “brick” to refer to consoles with permanent online bans? To me they’re very different and a brick is much worse.

3·2 months ago

If the penalties are harsh for not attributing AI to an image, what’s to stop sites from just having a blanket disclaimer saying that ALL images on the page were generated by AI?

Just like what happens with companies slapping Prop. 65 warnings on products that don’t actually need them, out of caution and/or ignorance

16·2 months ago

No, mostly because I’m against laws which are literally impossible to enforce. And it’ll become exponentially harder to enforce as the years pass on.

I think a lot of people will get annoyed at this comparison, but I see a lot of similarity between the attitudes of the “AI slop” people and the “We can always tell” anti-trans people, in the sense that I’ve seen so many people from the first group accuse legitimate human works of being AI-created (and obviously we’ve all seen how often people from the second group have accused AFAB women of being trans). And just as those anti-trans people actually can’t tell for a huge number of well-passing trans people, there are a lot of AI-created works out there that are absolutely passing for human-created works en masse, without giving off any obvious “slop” signs. Real people will get (and are getting) swept up and hurt in this anti-AI reactionary phase.

I think AI has a lot of legitimately decent uses, and I think it has a lot of stupid-as-shit uses. And the stupid-as-shit uses may be in the lead for the moment. But mandating tagging AI-generated content would just be ineffective and reactionary. I do think it should be regulated in other, more useful ways.

What I’d really like to know is, why are screenshots of tweets and such always so poorly cropped? Why do they all need to be 80% dead space vertically?

15·2 months ago

When they plaster that “If everyone reading this donated $x.yz right now, we’d be done within the hour” message I’ll usually donate exactly the amount it says.

It is 33% if the answer itself is randomly chosen from 25%, 50%, and 60%. Then you have:

If the answer is 25%: A 1/2 chance of guessing right

If the answer is 50%: A 1/4 chance of guessing right

If the answer is 60%: A 1/4 chance of guessing right

And 1/3*1/2 + 1/3*1/4 + 1/3*1/4 = 1/3, or 33.333…% chance

If the answer is randomly chosen from A, B, C, and D (With A or D being picked meaning D or A are also good, so 25% has a 50% chance of being the answer) then your probability of being right changes to 37.5%.

This would hold up if the question were less purposely obtuse, like asking “What would be the probability of answering the following question correctly if guessing from A, B, C and D randomly, if its answer were also chosen from A, B, C and D at random?”, with the choices being something like “A: A or D, B: B, C: C, D: A or D”
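For anyone who wants to check both numbers, here is a minimal sketch of the arithmetic. It assumes the options are A: 25%, B: 50%, C: 60%, D: 25% (the exact placement of 50% and 60% is my assumption and doesn't change the result), and the names `options` and `p_correct` are just made up for illustration.

```python
from fractions import Fraction

# Assumed option values: A and D both say 25%, B and C hold the other two.
options = {"A": 25, "B": 50, "C": 60, "D": 25}


def p_correct(answer_weights):
    """Chance that a uniform random guess among A-D matches the true value.

    answer_weights maps each possible true value to its probability.
    """
    total = Fraction(0)
    for value, weight in answer_weights.items():
        # Number of letters whose value equals the true answer.
        hits = sum(1 for v in options.values() if v == value)
        total += weight * Fraction(hits, len(options))
    return total


# Case 1: the true answer is picked uniformly from the three distinct values.
by_value = {v: Fraction(1, 3) for v in set(options.values())}

# Case 2: the true answer is picked uniformly from the four letters,
# so 25% ends up with weight 1/2 because both A and D carry it.
by_letter = {}
for v in options.values():
    by_letter[v] = by_letter.get(v, Fraction(0)) + Fraction(1, 4)

print(p_correct(by_value))   # 1/3
print(p_correct(by_letter))  # 3/8
```

Using `Fraction` keeps the results exact, so 1/3 and 3/8 (37.5%) come out without any rounding.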

Adding on to the reasons others posted: Put yourself in his shoes for a moment. If you take off a year for him, that puts an immense amount of pressure on him. Pressure to go to the same school as you, pressure to go to school at all, even pressure to stay in the relationship.

It’s always gonna be “They made this gigantic life decision to their detriment for me, so if I change my mind about anything and want to do things differently, like by going to a different school, or not going to a school, or wanting to break up, then I’m a huge ungrateful jerk.”

Putting that kind of pressure on someone isn’t really cool, especially if they’re actively discouraging you from doing so.

11·4 months ago

Given the specific names on that list, I took it as an awkward attempt to list the people they think are standing up, rather than a list of people they were admonishing for not standing up

80·4 months ago

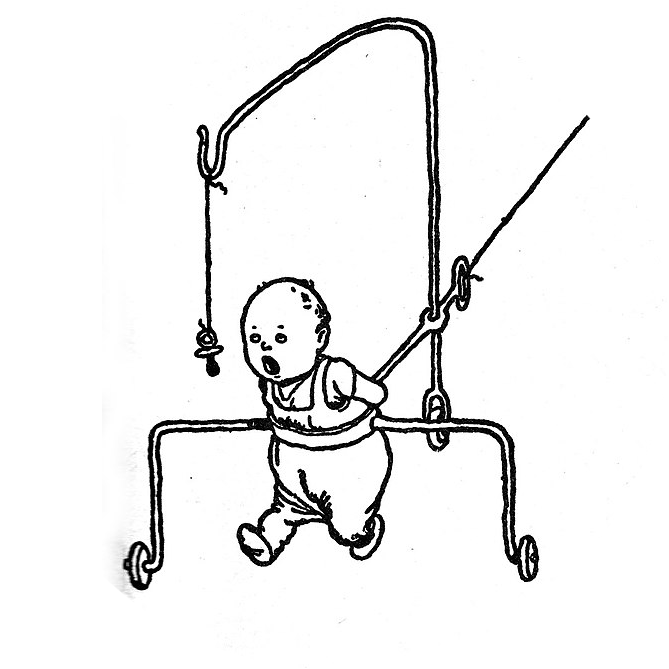

Others have covered that there were internal supports, so they were supporting nothing at all. But let’s assume they weren’t.

I’m going for an intentional underestimate - so let’s say there are 10 people in your layer (I think 8 is more likely), then 24 above them, 18 above them, 18 above them, 25 above them, 14 above them, and 2 above them. I think most people would agree those are underestimates for each ring.

That’s 101 people being supported by 10 people. If we take another underestimate that each of those people weighs 100 pounds (45.36 kg) then that’s 10,100 pounds (4581.28 kg) - or 1010 pounds (458.13 kg) supported by each of the 10 people in your ring, completely ignoring the weight of the metal rings visible in the picture. So I think it’s safe to say it was mostly the internal supports at work.
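The same estimate as a quick sketch, using only the ring counts and the 100-pound guess from above. The variable names are mine, and the conversion factor is the standard 0.45359237 kg per pound.

```python
LB_TO_KG = 0.45359237  # exact definition of the avoirdupois pound in kg

bottom_ring = 10                        # people assumed in the bottom layer
rings_above = [24, 18, 18, 25, 14, 2]   # deliberate underestimates per ring
weight_lb = 100                         # deliberate underestimate per person

supported = sum(rings_above)            # people resting on the bottom ring
total_lb = supported * weight_lb
per_person_lb = total_lb / bottom_ring

print(supported)                                      # 101
print(total_lb, round(total_lb * LB_TO_KG, 2))        # 10100 4581.28
print(per_person_lb, round(per_person_lb * LB_TO_KG, 2))  # 1010.0 458.13
```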

96·4 months ago

Here’s a better article that isn’t as uncritically sensationalist.

https://arstechnica.com/science/2025/04/de-extinction-company-announces-that-the-dire-wolf-is-back/

tl;dr is that it’s basically just a gray wolf with 14 edited genes, most of which are from natural gray wolf populations rather than dire wolf genomes. The result is a gray wolf that’s visually similar to a dire wolf, not a dire wolf.

Honestly the worst thing about this equation isn’t the fact that they had poor typesetting, it was that they used decorative constants. The ε and φ values they chose just cancel out. The equation is equivalent to (xᵢ - mᵢ) / mᵢ.

Asterisks for multiplication are fine and normal and common in typed text. Where it’s unusual is in text that’s been typeset, where using things like asterisks for multiplication defeats the point of typesetting. It would be like going through all the effort to typeset an equation, but still saying sqrt(x) instead of using the square root symbol.

1·4 months ago

disregard this comment

1·4 months ago

Do you follow the reasoning for why they set it up this way? The comments in this function from `_xorg` in keyboard make it seem like it expects `K1 K2 K3 K4`.

```python
#: Finds a keycode and index by looking at already used keycodes
def reuse():
    for _, (keycode, _, _) in self._borrows.items():
        keycodes = mapping[kc2i(keycode)]

        # Only the first four items are addressable by X
        for index in range(4):
            if not keycodes[index]:
                return keycode, index
```

I assume that second comment is the reason the person who wrote your function likes the number 4.

Which way is right/wrong here? It would seem at least part of the issue to me is that they don’t make the list be `K1 K2 K1 K2` as they say, since the function I quoted above often receives a list formatted like `K1 K2 K1 NoSymbol`.

Also, if I modify the function you quoted from to remove the duplications, I’m still finding that the first element is always duplicated to the third element anyways - it must be happening elsewhere as well. Actually, even if I modify the entire function to just be something nonsensical and predictable like this:

```python
def keysym_normalize(keysym):
    # Remove trailing NoSymbol
    stripped = list(reversed(list(
        itertools.dropwhile(
            lambda n: n == Xlib.XK.NoSymbol,
            reversed(keysym)))))

    if not stripped:
        return
    else:
        return (keysym_group(stripped[0], stripped[0]), keysym_group(stripped[0], stripped[0]))
```

then the behavior of the output doesn’t change at all from how it behaves when this function is how it normally is… It still messes up every third special character, duplicating the previously encountered special character.

Later edit: After further investigation, the duplication of the first entry to the third entry seems to happen in the Xlib library, installed with pynput, in the display.py file, in the change_keyboard_mapping function, which only has a single line. Inspecting the output of the get_keyboard_mapping() function both before and after the change_keyboard_mapping function does its thing shows that it jumps right from [0, 0, 0, 0, 0, 0, 0, 0, 0, 0] to [keysym, 0, keysym, 0, 0, 0, 0, 0, 0, 0]. It’s still unclear to me if this is truly intended or a bug.

2·4 months ago

Gonna make some notes since I made some progress tonight (so far).

Within pynput’s keyboard’s _xorg.py file, in the Controller class, self._keyboard_mapping maps from each key’s unique keysym value, which is an integer, to a 2-tuple of integers. The actual keysym for each key in the mapping appears to be correct, but occasionally the 2-tuple duplicates that of another entry in self._keyboard_mapping - and these duplicates correspond precisely to the errors I see in pynput’s outputs.

For example, ‘𝕥’ has keysym = 16897381 and ‘𝕖’ has keysym = 16897366, but both 16897381 and 16897366 map, in self._keyboard_mapping, to the 2-tuple (8, 1) - and ‘𝕖’ is indeed printed as ‘𝕥’ by pynput (𝕥’s keysym appears first in self._keyboard_mapping). The 2-tuples that keysyms map to are not unique or consistent: they vary based on the order the keysyms were encountered, and they reset when X resets.

Through testing, I found that this type of error happens precisely every third time a new keysym is added to self._keyboard_mapping, and that every third such mapping always duplicates the 2-tuple of the previous successful mapping.

From that register function I mentioned, the correct 2-tuple should be, I believe, (keycode, index). This is not happening correctly every 3rd registration, but I’m not yet sure why.

However, I did find a bit more than that: register() gets its keycode and index from one of the three functions above it: reuse(), borrow(), or overwrite(). The problematic keys always get their keycode and index from reuse - and reuse finds the first unused index 0-3 for a given keycode, then returns that. What I found here is that, for the array `keycodes`, the first element is always duplicated to the third position as well, so indexes 0 and 2 are identical. As an example, here are two values of `keycodes` from my testing:

```
keycodes = array('I', [16897386, 0, 16897386, 0, 0, 0, 0, 0, 0, 0])
keycodes = array('I', [16897384, 16897385, 16897384, 0, 0, 0, 0, 0, 0, 0])
```

With this in mind, I was actually able to fix the bug by changing the line `for index in range(4):` in reuse() to `for index in range(2):`. With that change my script no longer produces any incorrect characters.

However, based on the comments in the function, I believe range(4) is the intended behavior and I’m just avoiding the problem instead of fixing it. I have a rather shallow understanding of what these functions or values are trying to accomplish. I don’t know why the first element of the array is duplicated to the third element. There’s also a different issue I noticed where even when this function returns an index of 3, that index of 3 is never used in self._keyboard_mapping - it uses 1 instead. I’m thinking these may be two separate bugs. Either way, these two behaviors combined explain why it’s every third time a new keysym is added to self._keyboard_mapping that the issue happens: While they in theory support an index of 0, 1, 2, or 3 for each keycode, in practice only indices 0, 1, and 3 work since 2 always copies 0 - and whenever 3 is picked it’s improperly saved as 1 somewhere.
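To sanity-check that those two behaviors really do add up to "every third keysym breaks", here is a toy model. This is not pynput's or Xlib's actual code: `simulate`, `duplicates`, the starting keycode of 8, and the way I hard-code "slot 0 is mirrored to slot 2" and "index 3 is stored as 1" are all my own assumptions, written only to mimic what I observed.

```python
def simulate(keysyms, scan_width, first_keycode=8):
    """Toy model of the observed registration behavior (not pynput's code).

    scan_width plays the role of N in `for index in range(N)` inside reuse().
    Returns a dict mapping keysym -> (keycode, stored index).
    """
    slots = {}    # keycode -> four keysym slots, 0 meaning "empty"
    mapping = {}  # keysym -> (keycode, index) as it ends up being stored
    next_keycode = first_keycode

    for keysym in keysyms:
        placed = None
        # Stand-in for reuse(): first empty slot on an already used keycode.
        for keycode, row in slots.items():
            for index in range(scan_width):
                if not row[index]:
                    placed = (keycode, index)
                    break
            if placed:
                break
        if placed is None:
            # Stand-in for borrow(): take a fresh keycode, use slot 0.
            placed = (next_keycode, 0)
            slots[next_keycode] = [0, 0, 0, 0]
            next_keycode += 1

        keycode, index = placed
        row = slots[keycode]
        row[index] = keysym
        if index == 0:
            row[2] = keysym  # assumption: slot 0 gets mirrored into slot 2
        stored_index = 1 if index == 3 else index  # assumption: 3 saved as 1
        mapping[keysym] = (keycode, stored_index)
    return mapping


def duplicates(mapping):
    """Keysyms whose (keycode, index) was already taken by an earlier keysym."""
    seen, clashes = set(), []
    for keysym, target in mapping.items():
        if target in seen:
            clashes.append(keysym)
        seen.add(target)
    return clashes


keysyms = [101, 102, 103, 104, 105, 106]  # made-up keysym values
print(duplicates(simulate(keysyms, 4)))   # [103, 106]
print(duplicates(simulate(keysyms, 2)))   # []
```

In this model, scanning four slots makes every third keysym collide with the one registered just before it, and scanning only two slots avoids the collision by never reaching the broken indices. That matches what I see, but it only shows the two observations are consistent with each other, not where the real bug lives.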

I may keep investigating the issues in search of a true fix instead of a cover-my-eyes fix.
